As of the 6.6 release, the JavaScript Transporter Data Feed functionality introduced in 6.4 has become even more flexible and powerful with the addition of an application-managed output writer. This new capability removes the need to work around the inherent heap memory constraints of the JS Node engine, so you can ingest larger data sets in a single feed.

Multi-Step JavaScript Transporter Refresher

Let’s start off with a quick refresher of JavaScript Transporter.

- Multi-Step JavaScript Transporter gives users the freedom to use JavaScript to feed data into Archer, with capabilities that include:

  - Native web request methods to make API requests

  - Native parsers for both XML and JSON

  - Easy syntax

- These capabilities resulted in:

  - The ability to make multiple API calls within a single Data Feed

  - The ability to string multiple dependent API calls together, which removes the need to develop and deploy custom-designed API middleware components

  - The ability to implement more complex integrations with Archer Data Feeds

- Memory constraints prior to the 6.6 release:

  - The original implementation of JavaScript Transporter allowed only a one-time write to disk before moving on to the next step of the data feed.

  - This meant the entire output structure had to be built and stored in a single variable before being passed on to the Transform step of the data feed.

  - The JS Node engine that the transporter leverages has a built-in limit of 268 MB for string variables and 2 GB for binary variables.

  - This made large data sets returned by external APIs difficult to manage, sometimes requiring the ingestion to be broken up into several data feed runs.
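To make the constraint concrete, here is a minimal sketch of the pre-6.6 pattern described above. It runs in plain Node rather than inside Archer, and `fetchPage` and `buildSingleOutput` are hypothetical names invented for illustration, not Archer or transporter functions. The point is only that every page ends up inside one string, and it is that string that eventually runs into the engine limit.

```javascript
// Hypothetical stand-in for a paged external API; not an Archer function.
function fetchPage(pageNumber, pageSize) {
  const records = [];
  for (let i = 0; i < pageSize; i++) {
    const id = pageNumber * pageSize + i;
    records.push({ id: id, name: 'record-' + id });
  }
  return records;
}

// Pre-6.6 style: every page is accumulated in memory and the whole
// payload is serialized into ONE string before it can be handed on.
function buildSingleOutput(totalPages, pageSize) {
  const all = [];
  for (let page = 0; page < totalPages; page++) {
    all.push(...fetchPage(page, pageSize));
  }
  return JSON.stringify({ records: all });
}

const output = buildSingleOutput(3, 2);
console.log(output.length + ' characters held in a single string');
```

With three small pages this is harmless, but the string grows with every page the external API returns, which is why very large result sets had to be split across several feed runs.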

6.6 Solution - Application Managed Output Writer

- A new custom function provides an output writer that is managed by the data feed application at run time.

- It allows the user to provide data as items that can be processed individually; the data feed application runtime then shelves them into multiple files that can be processed independently in the next step.

- There is no limitation on the amount of data that an Archer data feed can process, since the files can be loaded and processed independently.

- The output writer internally handles formatting the data for the different data types, writing the data to file(s), and creating a manifest file, which is used in the next step of the data feed.

- Temporary files created by the output writer are automatically cleaned up when the data feed completes.
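The behavior in the list above can be pictured with a toy writer. This is a sketch under stated assumptions, not Archer's implementation: `createShardingWriter`, the `part-N.json` file names, the manifest layout, and the items-per-file threshold are all invented here to show the idea of buffering items, flushing them to several files, recording those files in a manifest, and cleaning up afterwards.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Illustrative only: none of these names or file layouts come from Archer.
function createShardingWriter(itemsPerFile) {
  const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'feed-'));
  const files = [];
  let buffer = [];

  // Write the buffered items to the next part file and empty the buffer,
  // so memory use stays bounded by itemsPerFile.
  function flush() {
    if (buffer.length === 0) return;
    const file = path.join(dir, 'part-' + files.length + '.json');
    fs.writeFileSync(file, JSON.stringify(buffer));
    files.push(file);
    buffer = [];
  }

  return {
    writeItem(item) {
      buffer.push(item);
      if (buffer.length >= itemsPerFile) flush();
    },
    // Flush the remainder and write a manifest listing every part file.
    close() {
      flush();
      const manifestFile = path.join(dir, 'manifest.json');
      fs.writeFileSync(manifestFile, JSON.stringify({ files: files }));
      return manifestFile;
    },
    // Remove the temporary directory and everything in it.
    cleanup() {
      fs.rmSync(dir, { recursive: true, force: true });
    },
  };
}

const writer = createShardingWriter(2);
for (let i = 0; i < 5; i++) writer.writeItem({ id: i });
const manifestPath = writer.close();
const manifest = JSON.parse(fs.readFileSync(manifestPath, 'utf8'));
console.log(manifest.files.length + ' part files listed in the manifest');
writer.cleanup();
```

Because each part file is small and self-contained, a later step can load one file at a time, which is what lifts the single-variable ceiling.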

Output Writer Instantiation

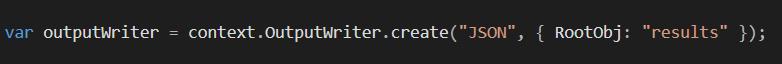

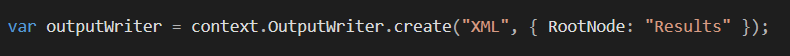

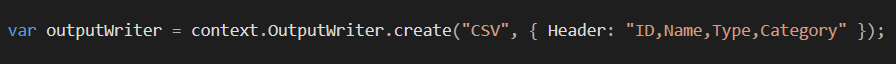

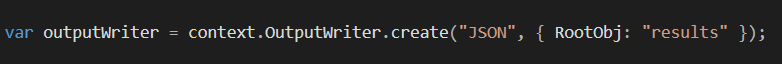

The instantiation of an output writer is required to use this feature, and it can occur only once in any user script. The output writer supports three types: JSON, XML, and CSV. The instantiation takes two parameters: type and initParams. The type controls how the transporter formats the data. The initParams are limited to RootObj, RootNode, or Header for the JSON, XML, and CSV types, respectively.

Here is an example of each type:

JSON:

XML:

CSV:
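As a rough aid, the pairing of type and initParams can be written out as data. The sketch below is not the Archer instantiation call itself; `validateWriterArgs` is a hypothetical helper, and the example values (`'records'`, `'ROOT'`, the header array) are placeholders whose expected shapes are assumptions. Whether the initParams key is mandatory is also not stated in this article; the helper simply treats it as required.

```javascript
// Pairing taken from the paragraph above: one initParams key per writer type.
const INIT_PARAM_BY_TYPE = { JSON: 'RootObj', XML: 'RootNode', CSV: 'Header' };

// Hypothetical helper (not part of Archer) that checks a type/initParams pair.
function validateWriterArgs(type, initParams) {
  if (!Object.prototype.hasOwnProperty.call(INIT_PARAM_BY_TYPE, type)) {
    throw new Error('Unsupported output writer type: ' + type);
  }
  const expectedKey = INIT_PARAM_BY_TYPE[type];
  const keys = Object.keys(initParams || {});
  if (keys.length !== 1 || keys[0] !== expectedKey) {
    throw new Error(type + ' expects initParams containing only "' + expectedKey + '"');
  }
  return { type: type, initParams: initParams };
}

// Example argument pairs; the values are placeholders, not documented defaults.
const jsonArgs = validateWriterArgs('JSON', { RootObj: 'records' });
const xmlArgs = validateWriterArgs('XML', { RootNode: 'ROOT' });
const csvArgs = validateWriterArgs('CSV', { Header: ['id', 'name'] });
console.log([jsonArgs.type, xmlArgs.type, csvArgs.type].join(', '));
```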

Leveraging Output Writer In Your Script

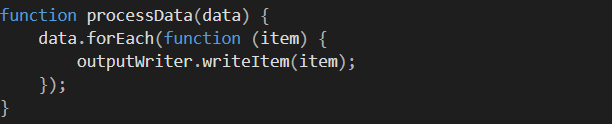

1. Creation of output writer

2. Write items to file using the output writer instance. The script can write to file as many times as necessary. Typically, the output writer replaces the code you currently use to build the output variable in your script.

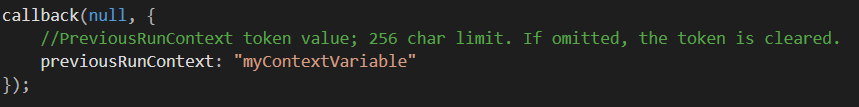

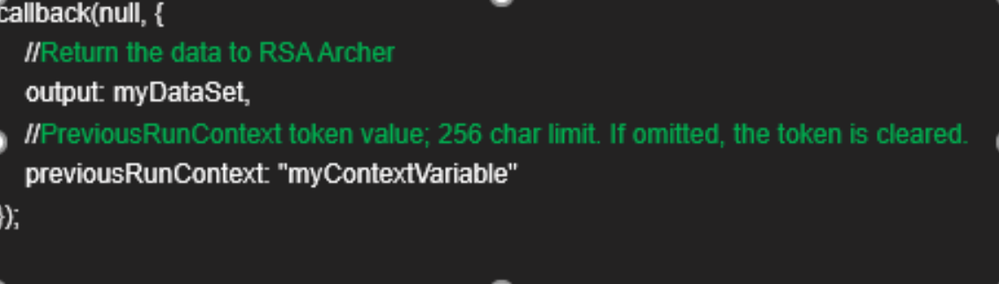

3. Since the output writer has already flushed the necessary data to files that are available to the next step, there is no need to pass a data feed output variable in the callback.
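The three steps can be sketched end to end as follows. This runs outside Archer, so the writer is mocked: `outputWriter` here is a plain object that only collects items in an array, `pages` is made-up sample data, and the error-first `callback(null)` shape is an assumption rather than the documented data feed callback signature.

```javascript
// Step 1 (mocked): a stand-in for the application-managed writer.
// The real writer flushes items to files; this one just records them.
const written = [];
const outputWriter = {
  writeItem: function (item) {
    written.push(item);
  },
};

// Made-up sample data standing in for pages returned by an external API.
const pages = [[{ id: 1 }, { id: 2 }], [{ id: 3 }]];

function runScript(callback) {
  for (const page of pages) {
    for (const record of page) {
      // Step 2: hand each item to the writer instead of appending it
      // to one large output variable.
      outputWriter.writeItem(record);
    }
  }
  // Step 3: signal completion without passing any output data.
  callback(null);
}

let callbackArgs;
runScript(function () {
  callbackArgs = Array.from(arguments);
});
console.log(written.length + ' items written, callback received ' + callbackArgs.length + ' argument(s)');
```

The script never holds more than one page in memory at a time, and nothing large travels through the callback.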

It is important to note that the original method of passing data through an output variable is still supported. Any existing script will continue to execute exactly as it did prior to 6.6.

As you can see, however, when dealing with large data sets, being able to periodically write data to a file is a huge advantage. I am excited to see how this feature is leveraged to quickly integrate more coveted information from even more data sources into your Risk Management solution.